Adventures in Data: Tackling the TEC Network #1

Hey there! 👋 Diving into my latest project, I'm all about wrangling data to shed some light on the TEC network. We're mixing theory with the thrill of real-time data. In this kickoff blog, we're not jumping straight into analysis. Instead, we'll lay the groundwork with the data itself. Down the road, I'm planning to craft a user-friendly, real-time app and eventually deep-dive into analyzing TEC's performance. Let's roll!

The Quest for Data

1. Theoretical Data: GTFS

- What's GTFS? It stands for General Transit Feed Specification. Picture this: a comprehensive map of the TEC network. It's a static dataset packed with stops, lines, and schedules.

- Static but Crucial: This dataset isn't about real-time changes; it's our baseline for everything that follows.
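To make the static side a bit more concrete, here's a minimal sketch of reading stops out of a GTFS `stops.txt` file. The column names (`stop_id`, `stop_name`, `stop_lat`, `stop_lon`) come from the GTFS spec; the `Stop` struct and `parse_stops` function are my own illustrative names, not code from this project, and the parser assumes no quoted fields containing commas (a proper CSV crate would handle those).

```rust
/// One row of GTFS `stops.txt`, reduced to four fields defined by the spec.
#[derive(Debug, Clone, PartialEq)]
struct Stop {
    id: String,
    name: String,
    lat: f64,
    lon: f64,
}

/// Parse the text of a `stops.txt` file, locating columns by header name.
/// Assumption: no quoted fields with embedded commas.
fn parse_stops(text: &str) -> Result<Vec<Stop>, String> {
    let mut lines = text.lines().filter(|l| !l.trim().is_empty());
    let header: Vec<&str> = lines
        .next()
        .ok_or_else(|| "empty file".to_string())?
        .split(',')
        .map(str::trim)
        .collect();

    // Look up a column index by its GTFS name.
    let col = |name: &str| -> Result<usize, String> {
        header
            .iter()
            .position(|h| *h == name)
            .ok_or_else(|| format!("missing column {}", name))
    };
    let (id, name, lat, lon) = (
        col("stop_id")?,
        col("stop_name")?,
        col("stop_lat")?,
        col("stop_lon")?,
    );

    lines
        .map(|line| -> Result<Stop, String> {
            let fields: Vec<&str> = line.split(',').map(str::trim).collect();
            let get = |i: usize| -> Result<&str, String> {
                fields
                    .get(i)
                    .copied()
                    .ok_or_else(|| format!("short row: {}", line))
            };
            Ok(Stop {
                id: get(id)?.to_string(),
                name: get(name)?.to_string(),
                lat: get(lat)?
                    .parse::<f64>()
                    .map_err(|e| format!("bad stop_lat: {}", e))?,
                lon: get(lon)?
                    .parse::<f64>()
                    .map_err(|e| format!("bad stop_lon: {}", e))?,
            })
        })
        .collect()
}
```

Calling `parse_stops` on the file contents gives back a `Vec<Stop>` to join against the live data later, or an error string naming the first bad row or missing column.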

2. Real-Time Data: The Live Pulse of TEC

- The Hunt: The journey to get this data was quite the saga. Initially, I sent an email and waited 10 days... and nothing. So, I switched to snail mail, waited another 20 days, and voilà – finally got the access token. The data treasure is now mine!

- The Juice: This is where things get exciting. We're talking updates every 5 seconds. Imagine, live bus locations streaming in almost real-time. There's also a bunch of alert data, but I'm tabling that for now. Our focus is on the buses and their routes.

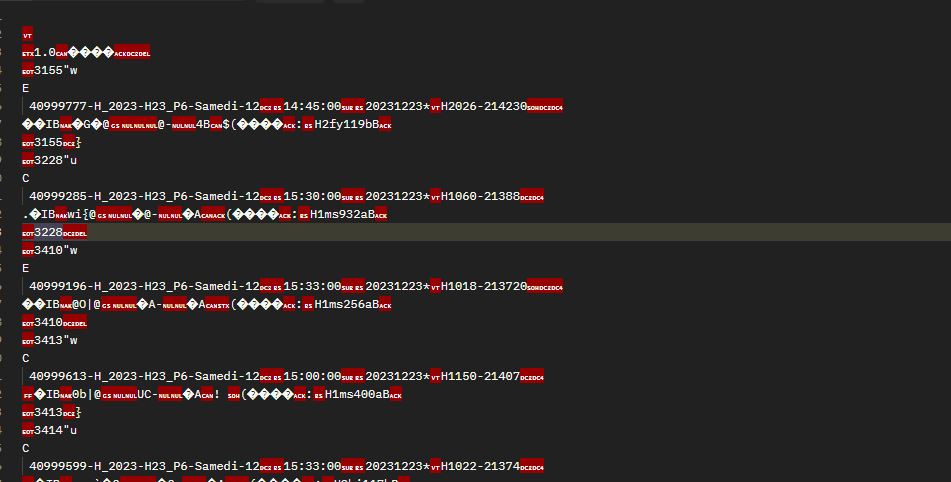

The protobuf file from LETEC.

Making It All Work

Why Rust?

Decision Time: Python or Rust? Rust won. It's all about that performance edge. Python's cool, but Rust? It's the speed demon I needed.

Tools of the Trade: Initially tried

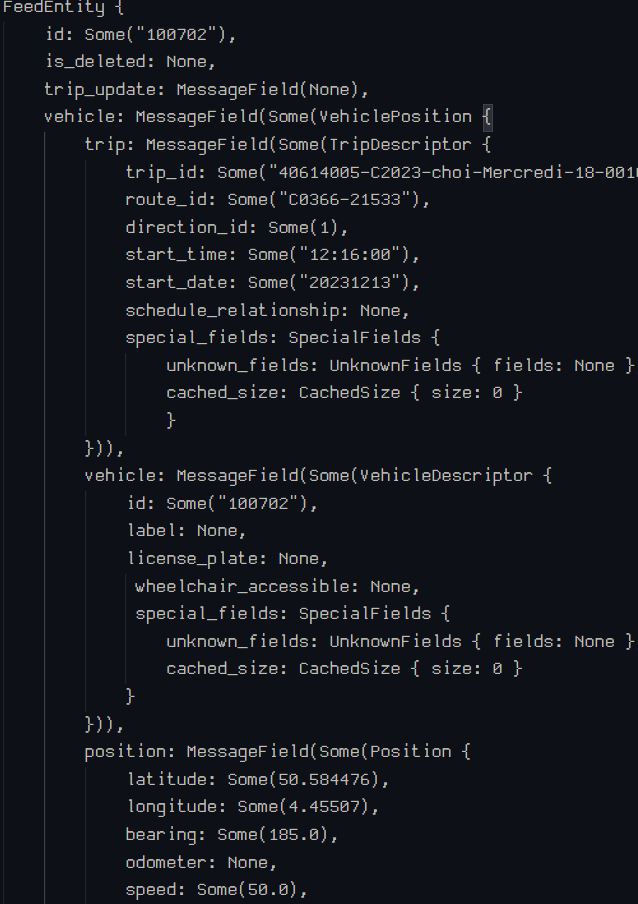

reqwest, but hit a snag with memory leaks. Switched toureqand keptrust-protobuffor data parsing.Ready to decode: Now that we have data and a parser, it's time to decode the protobuf file. Here's the result:
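For a feel of what a protobuf parser is doing under the hood, here's a tiny sketch of the wire format's building block, the base-128 varint. `rust-protobuf` handles all of this for you, so this is purely illustrative; `decode_varint` and `split_key` are hypothetical helper names, not part of any library.

```rust
/// Decode one base-128 varint from the start of `buf`.
/// Returns the value and the number of bytes consumed,
/// or `None` if the input is truncated or longer than 10 bytes.
fn decode_varint(buf: &[u8]) -> Option<(u64, usize)> {
    let mut value: u64 = 0;
    for (i, &byte) in buf.iter().enumerate().take(10) {
        // The low 7 bits carry data, least significant group first.
        value |= u64::from(byte & 0x7f) << (7 * i);
        // A clear high bit marks the last byte of the varint.
        if byte & 0x80 == 0 {
            return Some((value, i + 1));
        }
    }
    None
}

/// Split a decoded field key into (field number, wire type).
fn split_key(key: u64) -> (u64, u8) {
    (key >> 3, (key & 0x07) as u8)
}
```

With the three bytes `08 96 01`, the first varint is the key (field 1, wire type 0, meaning "varint") and the next two bytes decode to the value 150.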

Maybe Printing Data?

Tech Stack: Eyeing an app alongside the analysis, I leaned on

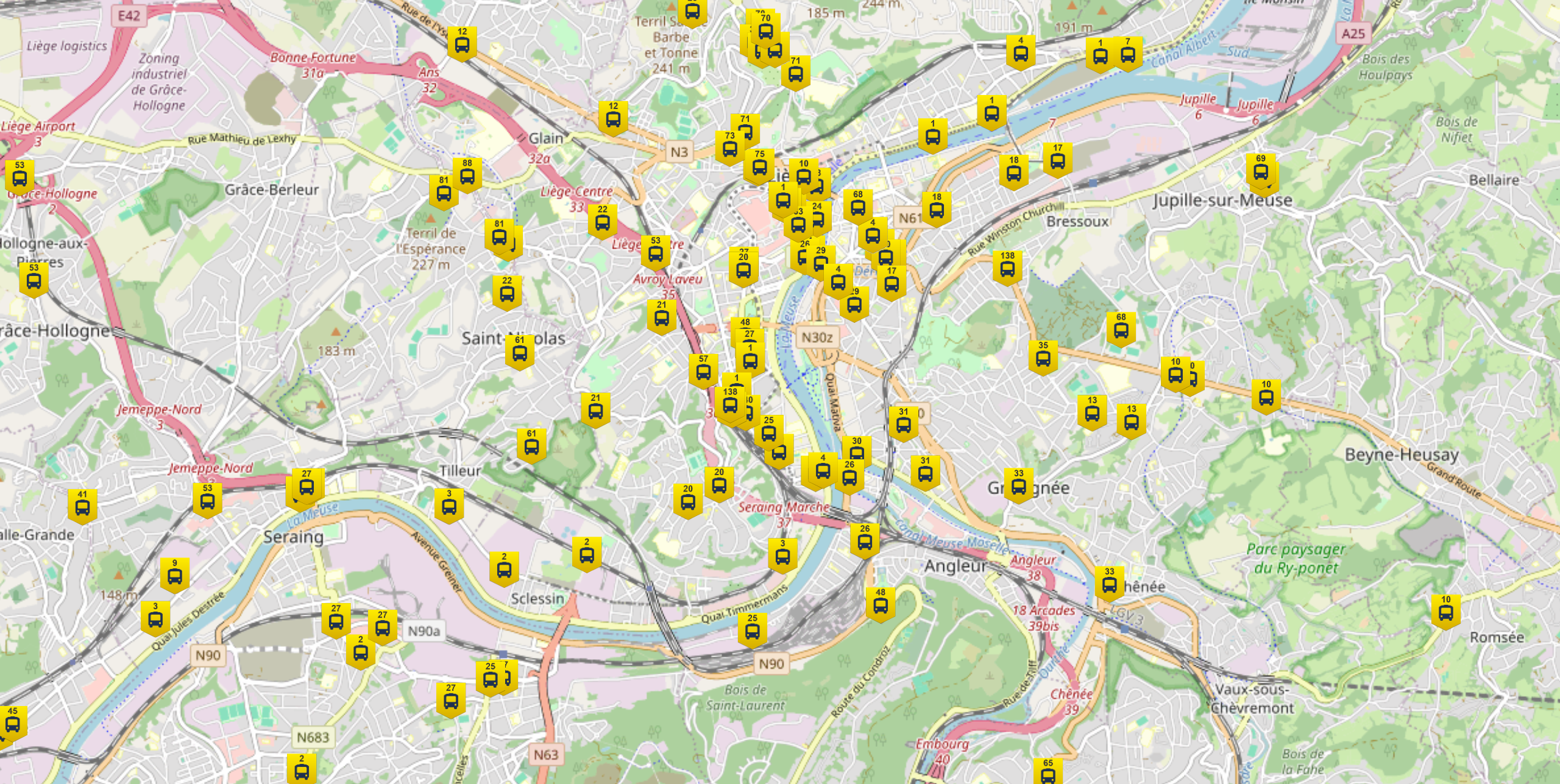

nextjs– I'm pretty comfy withReact.Initial Hurdle: The real puzzle was figuring out the best way to visually represent our data. After some brainstorming, I decided on

deck.glfor creating a massive, interactive map of Wallonia with WebGL for performance. But that wasn't all – I also realized the need for a robust sharing component on the backend.

(Nothing to see here, just a map of Wallonia.)

Breaking Through: `Axum` to the rescue! Already familiar with it and its websocket support, plus it's a performance powerhouse. I've added a websocket endpoint `/ws` that sends the data every 5 seconds to the user. A bit of coding (and coffee!) later, and I've got real-time bus positions streaming in.

First Glimpse: Managed to display a Wallonia-wide map showcasing bus locations. It's a work in progress, but hey, it's a start.

Tweaking Data: Initially, the data was chomping up bandwidth (100-200 KB every 5 seconds). Not terrible, but could be better. Solution? Encoding the JSON as base64, then compressing with gzip. To be fair, base64 on its own inflates the payload by about a third, so the savings come from the gzip step. It's a start: the data size dropped by 10-20x.
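A quick back-of-the-envelope on the base64 step: standard padded base64 turns every 3 input bytes into 4 output characters, so it always grows the payload before gzip gets to work on it. The helper below is just that arithmetic (`base64_len` is a hypothetical name, not a function from the `base64` crate).

```rust
/// Length of standard padded base64 output for `n` input bytes: 4 * ceil(n / 3).
fn base64_len(n: usize) -> usize {
    (n + 2) / 3 * 4
}
```

For a 150,000-byte JSON payload, `base64_len(150_000)` is 200,000. Since a websocket binary frame can carry raw bytes, gzipping the JSON directly and skipping base64 might be worth trying later, though I haven't measured it here.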

Client side:

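```js
ws.onmessage = async (message) => {
  try {
    // message.data arrives as a Blob by default: gunzip it as a stream
    const ds = new DecompressionStream("gzip");
    const decompressedStream = message.data.stream().pipeThrough(ds);
    // The decompressed text is base64-encoded JSON
    const text = await new Response(decompressedStream).text();
    const data = JSON.parse(atob(text));
    setBuses(data);
  } catch (e) {
    // With await, decompression and parsing failures land here too
    console.log(e);
  }
};
```

Server side: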

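```rust
// Imports this fragment presumably relies on (assumed, not shown in the original):
// use axum::extract::ws::Message;
// use base64::{engine::general_purpose, Engine as _};
// use tokio::time::sleep;

// Runs inside the websocket handler, where `socket` and `store` are in scope.
loop {
    // Latest snapshot of bus positions, serialized as JSON
    let datas = store.retrieve_json().await;
    // base64-encode, then gzip (compress_string is a local helper)
    let message = general_purpose::STANDARD.encode(&datas);
    let message = match compress_string(&message) {
        Ok(message) => message,
        Err(e) => {
            println!("Error compressing message: {}", e);
            return;
        }
    };
    let message = Message::Binary(message);
    // A failed send usually means the client is gone, so stop the loop
    if socket.send(message).await.is_err() {
        println!("Error sending message");
        return;
    }
    sleep(std::time::Duration::from_secs(5)).await;
}
```

Future Thoughts: Sending all the data isn't the most efficient. Perhaps a quadtree approach for just viewport data could cut down transmission load. Food for thought, but not the current focus.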
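To give the quadtree idea some shape, here's a minimal sketch of a point quadtree with a viewport query. Nothing here is implemented in the project yet: `Point`, `Rect`, `QuadTree`, `CAPACITY` and `MAX_DEPTH` are all hypothetical names and values, and it assumes the client would report its visible bounding box to the server.

```rust
/// A bus position in degrees.
#[derive(Clone, Copy, Debug, PartialEq)]
struct Point {
    lon: f64,
    lat: f64,
}

/// An axis-aligned box, used for tree cells and for the client's viewport.
#[derive(Clone, Copy, Debug)]
struct Rect {
    min_lon: f64,
    min_lat: f64,
    max_lon: f64,
    max_lat: f64,
}

impl Rect {
    fn contains(&self, p: &Point) -> bool {
        p.lon >= self.min_lon && p.lon <= self.max_lon
            && p.lat >= self.min_lat && p.lat <= self.max_lat
    }

    fn intersects(&self, o: &Rect) -> bool {
        self.min_lon <= o.max_lon && o.min_lon <= self.max_lon
            && self.min_lat <= o.max_lat && o.min_lat <= self.max_lat
    }
}

const CAPACITY: usize = 4; // points a leaf holds before it splits
const MAX_DEPTH: u32 = 16; // stops endless splitting on identical points

struct QuadTree {
    bounds: Rect,
    depth: u32,
    points: Vec<Point>,
    children: Vec<QuadTree>, // empty for a leaf, four cells after a split
}

impl QuadTree {
    fn new(bounds: Rect) -> Self {
        QuadTree { bounds, depth: 0, points: Vec::new(), children: Vec::new() }
    }

    /// Insert a point; returns false if it lies outside this cell.
    fn insert(&mut self, p: Point) -> bool {
        if !self.bounds.contains(&p) {
            return false;
        }
        if self.children.is_empty() {
            if self.points.len() < CAPACITY || self.depth >= MAX_DEPTH {
                self.points.push(p);
                return true;
            }
            self.split();
        }
        // A point on a shared edge goes to the first child that accepts it.
        self.children.iter_mut().any(|c| c.insert(p))
    }

    /// Turn a full leaf into four quadrants and push its points down.
    fn split(&mut self) {
        let b = self.bounds;
        let mid_lon = (b.min_lon + b.max_lon) / 2.0;
        let mid_lat = (b.min_lat + b.max_lat) / 2.0;
        let cells = [
            Rect { min_lon: b.min_lon, min_lat: b.min_lat, max_lon: mid_lon, max_lat: mid_lat },
            Rect { min_lon: mid_lon, min_lat: b.min_lat, max_lon: b.max_lon, max_lat: mid_lat },
            Rect { min_lon: b.min_lon, min_lat: mid_lat, max_lon: mid_lon, max_lat: b.max_lat },
            Rect { min_lon: mid_lon, min_lat: mid_lat, max_lon: b.max_lon, max_lat: b.max_lat },
        ];
        let depth = self.depth + 1;
        self.children = cells
            .iter()
            .map(|&bounds| QuadTree { bounds, depth, points: Vec::new(), children: Vec::new() })
            .collect();
        for p in std::mem::replace(&mut self.points, Vec::new()) {
            let _ = self.children.iter_mut().any(|c| c.insert(p));
        }
    }

    /// Collect every point inside `view`, skipping cells that do not overlap it.
    fn query(&self, view: &Rect, out: &mut Vec<Point>) {
        if !self.bounds.intersects(view) {
            return;
        }
        out.extend(self.points.iter().cloned().filter(|p| view.contains(p)));
        for child in &self.children {
            child.query(view, out);
        }
    }
}
```

The rough idea would be to rebuild the tree from each fresh snapshot, then answer every client with `query` on the viewport it last reported instead of the full list. Whether that beats a plain linear filter at this data size is something I'd want to measure first.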

The Journey

- Blog Mission: Fetch data and show it off.

- What We Achieved: We're fetching and displaying data on a map, and doing it pretty efficiently. It's not the final form, but a promising beginning, and there's room to grow and improve.